Applies to AnyLogic Cloud 2.5.2. Last modified on April 11, 2025.

This article describes the architecture of AnyLogic Private Cloud, its components, and deployment details.

- To learn more about Private Cloud in general, see AnyLogic Private Cloud.

- To learn how to install Private Cloud, see Installing Private Cloud.

- To learn about customizable configuration files, see Private Cloud: Configuration files.

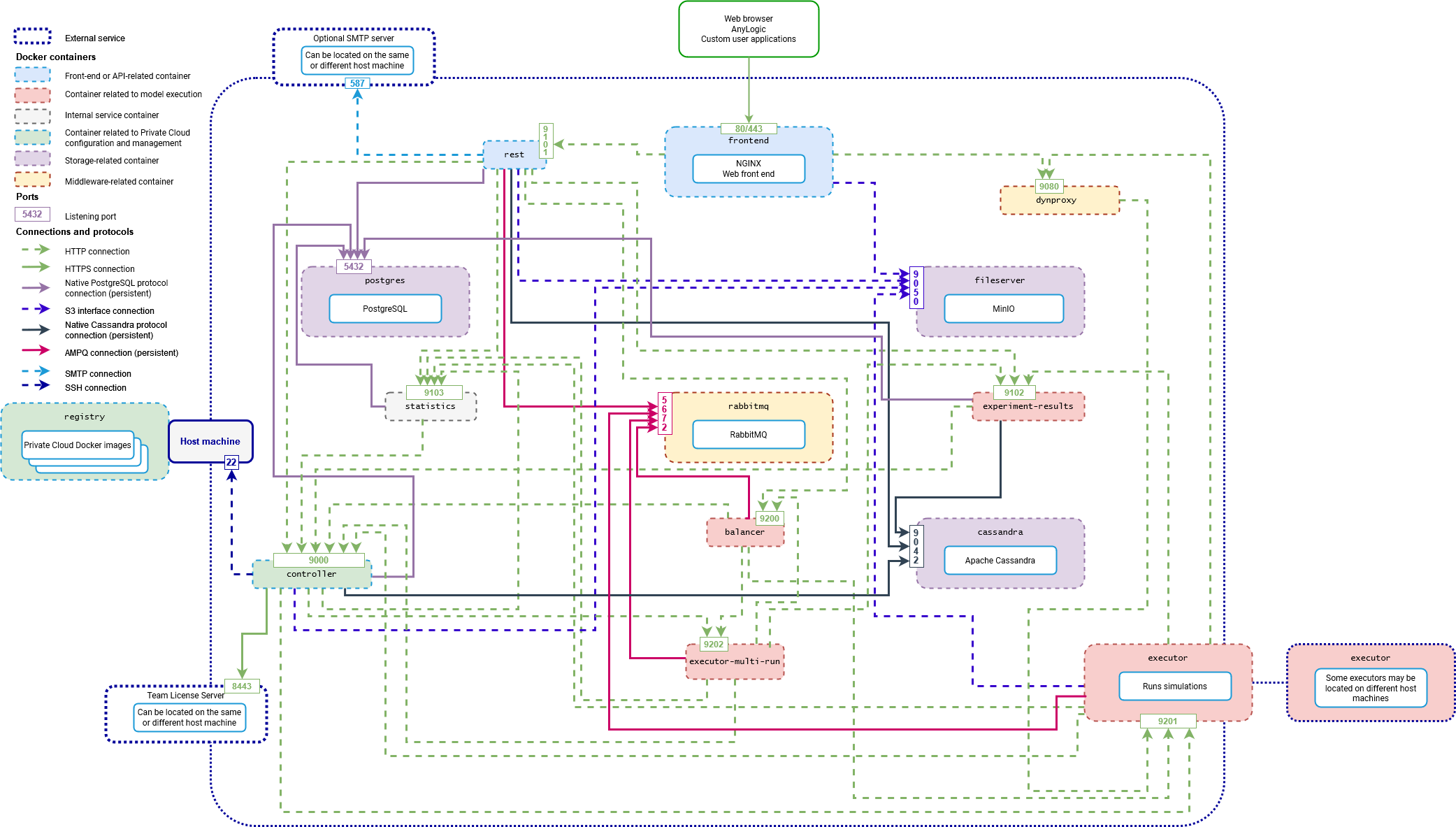

The following is a diagram of Private Cloud components and Docker containers, showing how they interact with each other.


Cloud service components use the standard Docker registry, which controller accesses to launch other components. The registry exposes port 5000 for image management.

All external connections to Private Cloud are made through the frontend service.

Each Private Cloud instance, regardless of the edition you use, consists of the following components:

| Name | Description | Listening ports | Protocol |
|---|---|---|---|
| controller | A key component of Private Cloud. Stores configuration data for the entire instance in the JSON format. Acts as a service discovery provider for instance components. Connects to the host machine through SSH to start other Private Cloud services. Connects to Team License Server over HTTPS to obtain Private Cloud license information. | 9000: Service discovery, configuration, and orchestration of containers. | HTTP |
| frontend | Provides instance users with the web UI and acts as a reverse proxy for the rest component and storage for public files of fileserver. It also provides the means to visualize the SVG animations of running experiments. | 80 or 443: The Cloud instance web interface. | HTTP(S) |
| rest | A key service that provides an API for operations performed from the front-end side. It allows models to be uploaded from AnyLogic desktop applications and provides means to execute user-side commands (start experiments, collect data for graphical charts, and so on). | 9101: Exposes the REST API to users, the frontend component, and the AnyLogic desktop installation, allowing for the management of models, experiments, and other instance entities. Also provides an API for services to interact with each other. | HTTP |
| fileserver | Serves as a repository for files. This includes model files (sources, model and library JARs, additional files), miscellaneous files (user avatars), executor connectors (service files that provide means for executor components to communicate with a model), and so on. | 9050: Deploying files and resources. | S3 file transfer protocol |
| postgres | Runs two PostgreSQL databases. The first stores meta information (user accounts, model metadata, model run data, and so on), while the second stores statistics. | 5432: Listening for the instance and user metadata (user profiles, models, versions, and so on). | Native PostgreSQL protocol |
| statistics | Collects data about user sessions and experiment runs. It has a database-independent HTTP interface that other services use to interact with this component. | 9103: Stores run metadata. | HTTP |
| experiment-results | Provides a way to request the aggregate components of multi-run experiments, collect the data, and give an output as requested by the user or web UI. | 9102: Listening for multi-run experiment results in the postgres database. | HTTP |
| rabbitmq | A messaging queue service, responsible for internal communication between AnyLogic Private Cloud components in the context of handling model run tasks. | 5672: Running the status queue. | AMQP |
| dynproxy | Acts as an HTTP reverse proxy for SVG animation requests directed to the executor nodes. | 9080: Manages the animation. | HTTP |
| balancer | Distributes model run tasks between executor components. Depending on the settings, it can also start these components whenever necessary. | 9200: Listening for tasks to other service components in the Cloud instance. | HTTP |
| executor-multi-run | Controls the execution of multi-run experiments and generates inputs for them. Implicitly splits multi-run experiments into individual model runs based on the experiment type, for example, by generating varied parameter inputs for parameter variation experiments. | 9202: Splitting multi-run experiments into single-run tasks, then submitting them to the balancer service component. | HTTP |
| executor | Executes the model runs: simulation experiments, animations, and single-run portions of multi-run experiments. A Private Cloud installation can have multiple executor components. To take advantage of this option, you must have an appropriate edition of Private Cloud. | 9201: Accepts model run tasks and executes single-run experiments. | HTTP |
| migration | A utility service. During a Private Cloud upgrade, controller starts this service to migrate the experiment run data from cassandra to postgres and to count in postgres the files stored in the fileserver component. The service then stops. | - | - |
| cassandra | Legacy: a NoSQL database for input-output pairs of all model versions in Private Cloud that stores run results and run configurations. Not used as of AnyLogic Cloud 2.5.0. | 9042: Working with run results. | Native Cassandra protocol |
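
When troubleshooting an installation, it can help to confirm that the listening ports from the table are reachable from inside the private network. The following is a minimal sketch, not an official AnyLogic tool: the port numbers come from the table, while the names `COMPONENT_PORTS`, `is_port_open`, and `check_components` are hypothetical helpers introduced for illustration. The frontend component (80 or 443) and the legacy cassandra component are left out. The sketch assumes all components run on one host; in a multi-node installation, each component would need to be checked on its own machine.

```python
import socket

# Listening ports as given in the component table.
COMPONENT_PORTS = {
    "controller": 9000,
    "rest": 9101,
    "fileserver": 9050,
    "postgres": 5432,
    "statistics": 9103,
    "experiment-results": 9102,
    "rabbitmq": 5672,
    "dynproxy": 9080,
    "balancer": 9200,
    "executor-multi-run": 9202,
    "executor": 9201,
}


def is_port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def check_components(host):
    """Map each component name to whether its port accepts TCP connections on host."""
    return {name: is_port_open(host, port) for name, port in COMPONENT_PORTS.items()}
```

For example, calling `check_components("127.0.0.1")` on the machine hosting the instance returns a dictionary of component names and reachability flags. A successful TCP connection only shows that something is listening on the port, not that the service is healthy.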

All internal Private Cloud tasks that involve the execution of models have a “tag” (an application label), assigned to them by the rest and executor-multi-run components. The assignment procedure takes into account the logical group of the task.

Experiment tasks are currently divided into 3 groups:

- Simulation tasks: a single model run

- Animation tasks: the calculations needed to display model run animations correctly on the front-end side

- Multi-run tasks: collections of single-run tasks

The balancer component reads this tag to determine which component should complete the task and distributes the load accordingly. For example, a task tagged executor is passed to one of the executor components, a task tagged executor-multi-run is passed to the executor-multi-run component, and so on.
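
The tag matching described above can be pictured as a simple filter. This is an illustrative sketch only: the `Node` and `Task` classes, their fields, and the `nodes_for_task` function are hypothetical and do not reflect the actual internal data model of Private Cloud.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    tag: str  # type of component the node runs, for example "executor"


@dataclass
class Task:
    task_id: str
    tag: str  # label assigned by the rest or executor-multi-run component


def nodes_for_task(task, nodes):
    """Return the nodes whose tag matches the tag of the task."""
    return [node for node in nodes if node.tag == task.tag]


nodes = [
    Node("node-1", "executor"),
    Node("node-2", "executor"),
    Node("node-3", "executor-multi-run"),
]
matching = nodes_for_task(Task("t-1", "executor-multi-run"), nodes)
```

Here `matching` contains only `node-3`, because it is the only node tagged executor-multi-run.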

When balancer receives a task, it requests from the controller component the list of nodes that have the same tag as the task. After that, balancer passes the task to a node in several steps:

- balancer retrieves information about the capacity of the suitable nodes. Each executor has a certain number of tasks it can process simultaneously, which corresponds to the number of CPU cores on the node, that is, the machine running the executor Docker container.

  - The nodes that are fully loaded are filtered out.

  - The remaining nodes are sorted by the current load in descending order.

- balancer starts sending tasks to the first node in the list.

  - If for some reason the node doesn’t accept the task, balancer tries to send it to the second node in the list, and so on.

- If none of the nodes accepts a task, the task is added to a special RabbitMQ queue consisting of “softly rejected” tasks. balancer checks this queue periodically and tries to restart the tasks hanging there. The delay between balancer checks for softly rejected tasks is slightly longer than the delay between balancer checks for new tasks.

  - If a task is rejected too many times, it is sent to another queue consisting of “hard rejected” tasks. The delay between checks there is even greater.

  - After several unsuccessful attempts to pass the task from the “hard rejected” queue, the task is dropped.
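
The steps above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the actual balancer implementation: the `NodeState` class, the `try_send` callback, and the `SOFT_LIMIT` and `HARD_LIMIT` thresholds are hypothetical, since the real retry limits and delays are not documented here. "Current load" is assumed to mean the number of tasks a node is currently running.

```python
from dataclasses import dataclass

SOFT_LIMIT = 3  # hypothetical: rejections tolerated in the "softly rejected" queue
HARD_LIMIT = 6  # hypothetical: total rejections before the task is dropped


@dataclass
class NodeState:
    name: str
    capacity: int  # tasks the node can process at once (CPU cores, per the text)
    running: int   # tasks currently in progress


def candidate_nodes(nodes):
    """Filter out fully loaded nodes, then sort the rest by load, highest first."""
    available = [n for n in nodes if n.running < n.capacity]
    return sorted(available, key=lambda n: n.running, reverse=True)


def dispatch(task_id, nodes, try_send):
    """Offer the task to each candidate in order.

    try_send(node, task_id) is a caller-supplied function that returns True
    if the node accepts the task. Returns the accepting node's name, or None
    if every candidate rejected the task.
    """
    for node in candidate_nodes(nodes):
        if try_send(node, task_id):
            node.running += 1
            return node.name
    return None


def queue_after_rejection(rejections):
    """Decide where a task goes after it has been rejected `rejections` times."""
    if rejections <= SOFT_LIMIT:
        return "soft-rejected"
    if rejections <= HARD_LIMIT:
        return "hard-rejected"
    return "dropped"
```

Sorting by load in descending order, as the text describes, fills busy nodes before idle ones. When `dispatch` returns `None`, the caller would increment the task's rejection count and use `queue_after_rejection` to choose the queue for the next attempt.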

From a security perspective, it is assumed that the machines running the Private Cloud components are on a private network and are not accessible to regular users of the instance.

For a regular user, the gateway for all interactions with Private Cloud is the machine running the frontend component. All API operations and user-level interactions are routed through an NGINX proxy controlled by frontend, so no other components should be visible or accessible to users.
